Friction: feature or bug?

The interaction model du jour is confusing

In a recent Substack post, AI commentator Ethan Mollick described the inherent friction in the UX of chat interfaces built on top of LLMs (ChatGPT et al):

This doesn’t feel like working with software, but it does feel like working with a human being. I’m not suggesting that AI systems are sentient like humans, or that they will ever be. Instead, I’m proposing a pragmatic approach: treat AI as if it were human because, in many ways, it behaves like one.

This places tools like ChatGPT in stark contrast with the wave of successful AI-powered products that preceded it (back when we called it machine learning, or ML). Those products were characterized by a relentless pursuit of the frictionless fulfillment of user needs and wants. AI (ML) helped supercharge this approach, which by design is at odds with the richness of many human interactions that LLMs simulate.

Why does it matter? We are in a hype-cycle, and for good reason. Generative AI is amazing. But accompanying a hype-cycle are the inevitable breathless discussions of "AI for X." Unfortunately, these conversations tend to gloss over important (and interesting!) questions about "product physics" that end up determining end user value.

Eliminate friction, get “passive” engagement

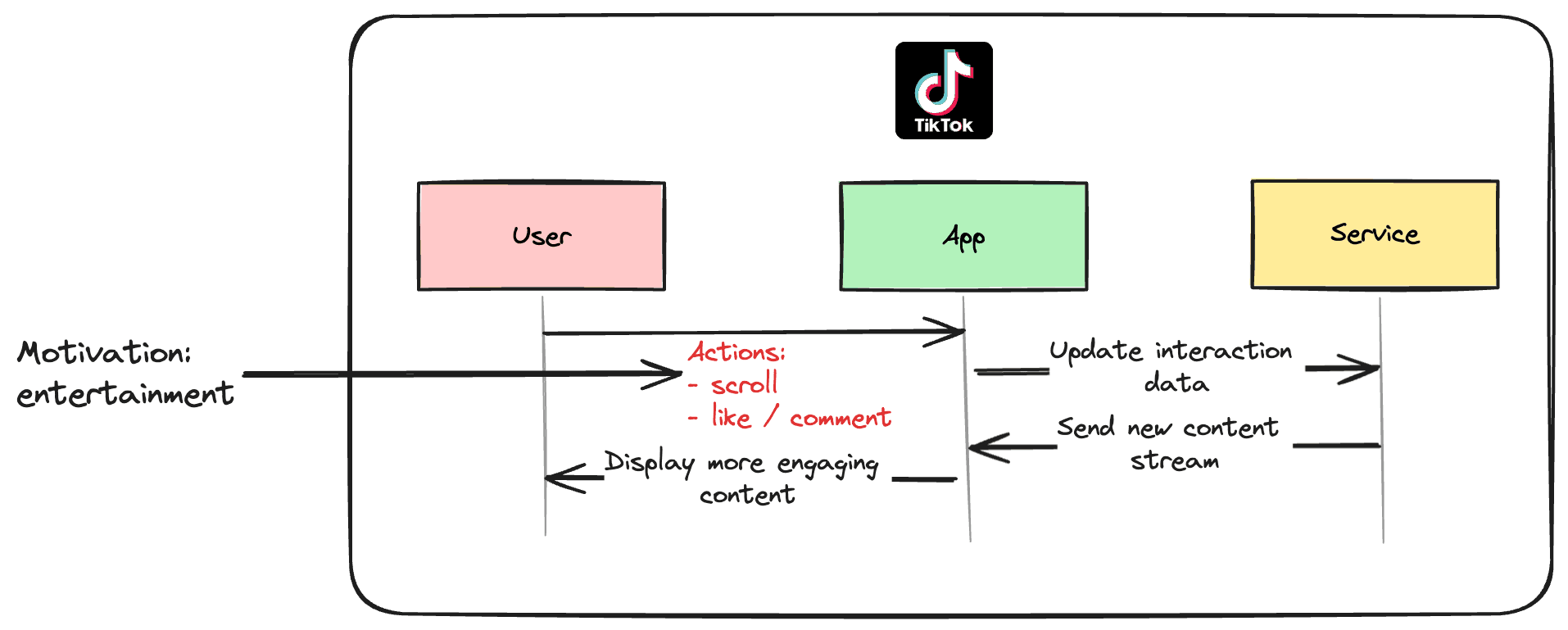

In the decade leading up to the ChatGPT “moment”, we saw an explosion of machine learning mediated user experiences. Here is an extremely crude, hand-wavy, and non-exhaustive characterization of the playbook for using AI (ML) to transform user behavior into more engaging user experiences:

- TikTok: dynamically transform user behavior (time spent watching, likes, etc.) into a personalized feed

- DoorDash: optimize suggestions and menu layout (as well as the up-sell carousel etc.), all with the goal of reducing the effort required to get a satisfying food order

- Tinder et al (swiping phase): as users swipe, the app is able to curate a sequence of potential matches that keeps the user engaged by maximizing the likelihood of a match (or enticing users to pay for features to increase that likelihood)

Note: To a first approximation I think everything interesting about Tinder et al products is in the discovery and matching phase.

The through-line of these products was reducing the friction of satisfying some (near) universal need: entertainment, food, sex, etc.; less effort to get easier access to, or more of, the thing you came for. A slightly more refined observation is that the products above present a fundamentally passive engagement model. The product adapts to the user’s preferences. And it clearly works. Recently a (very successful and smart) friend was extolling the virtues of TikTok. She described the joy of turning her brain off after a long day at work. Sometimes that’s what we want!

This is the “well-worn” playbook I referred to above. Eliminate friction to make the active component of engagement low effort, while the product still fulfills a desire.

More human, more work, more friction

In contrast, LLMs demand the exact opposite. To get the best out of them we need to thoughtfully prompt them. This is fundamentally not passive. Returning to the quote we opened with, the corollary of the humanoid capabilities is that we, as users, are required to be more human in order to engage with them. We must speak (or write) and think far more in order to (usefully) interact with these models, which is a lot more effort than scrolling!

I think this dynamic was nicely illustrated by a Tech Twitter (X) exchange over some “anecdata” from the field, which also tracks with my own private discussions. The demos are fantastically engaging to users, but the friction of guiding the LLM towards the desired outcome is very real and impacts retention.

The degree of end user adoption of AI is hotly debated, and difficult to analyze. If we suppose that this technology is indeed working for end-users, and I firmly believe it is, how do we "square the circle" of a somewhat clunky interaction model with what has historically been the recipe for successful digital products, i.e. reducing friction?

(Mini) Case studies

Let's take a stab at answering this by looking at how the LLM interaction model is presented to users by some of the more successful generative AI products that have come to market.

GitHub Copilot

GitHub Copilot was arguably the first breakout product built on top of generative AI. The models were obviously less advanced at the time, and there is a great quote from Nat Friedman in a Stratechery interview where he articulates the challenges of building around a model that is frequently wrong:

“How do you take a model which is actually pretty frequently wrong and still make that useful”? So you need to develop a UI which allows the user to get a sense and intuition themselves for when to pay attention to the suggestions and when not to, and to be able to automatically notice, “Oh, this is probably good. I’m writing boilerplate code,” or “I don’t know this API very well. It probably knows it better than I do,” and to just ignore it the rest of the time.

For the sake of this article the interesting product choice they made involved basically eliminating the "human" aspect of using LLMs. Their user was already coding, and they used that environment to feed the context window.

In this case the interaction model of an LLM didn’t really introduce friction. The product innovation “packaged” the generations as a “bonus” for the user, served inline. Reasonable people can debate the utility of the tool, but the product form factor they chose cleverly side-stepped the tradeoffs LLMs often present for product design.
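To make "feeding the context window" from the editor a little more concrete, here is a minimal sketch. It is purely illustrative: the function name `build_prompt`, the character budget, and the idea of using only the text before the cursor are my assumptions, not a description of how Copilot actually assembles its prompts.

```python
def build_prompt(file_text: str, cursor: int, budget: int = 2000) -> str:
    """Hypothetical helper: use the code just before the cursor as the prompt.

    The user never writes a prompt. Whatever they have already typed
    becomes the context, trimmed to a fixed (assumed) character budget.
    """
    start = max(0, cursor - budget)  # keep only the most recent `budget` chars
    return file_text[start:cursor]


# The "prompt" is simply what the user was already doing: writing code.
source = "import os\n\ndef list_python_files(path):\n    "
prompt = build_prompt(source, cursor=len(source))
```

The point of the sketch is the absence of any conversational step: the input to the model is a by-product of work the user was doing anyway.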

Midjourney

Midjourney transforms a text prompt into an image, and it's apparently doing very well. Its interaction model is typing prompts into a Discord server. The prompt interface is so clunky that there are even tools to generate the prompts, which are themselves clunky.

A few observations:

- There are extremely simple prompts that work on Midjourney, so users can "opt in" to more complexity with more elaborate prompts

- Drawing images is complicated, and so describing a “class” of image for which the model can generate an example is in turn very complicated

This is in no way a criticism; indeed, this "clunky" interface is orders of magnitude cheaper than producing the image by hand. My suspicion is that these interfaces will accumulate more optional complexity over time as they find ways to expose the knobs and dials to users, the way products like Office catered to ever more niche use-cases as they matured.

The friction associated with the interaction model represents a minimal and irreducible amount of complexity for the user to express their artistic intent.

ChatGPT

ChatGPT has been the breakout product of the generative AI boom. It’s a very hard product to analyze because it has such a diverse set of use-cases, and OpenAI releases virtually no data about how the product is used. Let’s lean on some anecdata about example use-cases to analyze the tradeoffs of the interaction model:

Coding

- For this use-case ChatGPT effectively replaces going to Stack Overflow (or similar) via a Google Search, and locating a solution. The generation takes things a step further by actually tailoring the answer to your specific use-case, which can work remarkably well.

- This represents a huge reduction in friction. The chatbot interaction model collapses discovering and tailoring the solution into a single query. It’s not always right, but the strength of the usage and retention strongly implies the "amortized analysis" is firmly in the black.

Translation

- I met an English speaker living in Mexico who was using ChatGPT to conduct business in a language he spoke competently, but in which he lacked the reading and writing fluency required for business communication.

- Here ChatGPT is fulfilling the promise that Google Translate never quite lived up to. The reduction in friction comes from moving from a tool for more “literal” translation of short phrases, to a tool that transforms “meaning” from one language to another, maintaining a higher degree of fluency.

Search (open ended)

- I’ve spoken to a lot of folks that use ChatGPT to get a grasp of a topic. There’s a nice example here from Lilian Weng, an employee at OpenAI, of using ChatGPT for explaining some topics related to an algorithm:

(Big thank you to ChatGPT for helping me draft this section. I’ve learned a lot about the human brain and data structure for fast MIPS in my conversations with ChatGPT.)

For cases where the goal is understanding the high level tradeoffs involved in some adjacent technical area, this is a great tool.

- The reduction in friction really comes from bundling discovery with synthesis. Instead of navigating to and skimming four Wikipedia pages, the reader gets the high level points at first ask, and can push on the thread. ChatGPT now links to sources for when the user feels like they need to go deeper, as of course ChatGPT is limited by what's encoded in the training or supplemented via the context window.

In each of these cases the interaction model, particularly bundling discovery and synthesis, provided a big reduction in friction for the user to get what they need. This bundling allows ChatGPT to combine a number of steps the user had to manually execute with a search engine and browser. The "clunky" interface is actually serving to reduce friction.

Character AI

This is another difficult company to analyze, both because it’s a private company and because the public statements of the company and its investors are likely to emphasize the less taboo use-cases of the product. That being said, the linked memo gets at the core value proposition (emphasis added):

But in the months since that fateful night, my conversations on Character.AI – a platform for creating and chatting with different AI characters – have turned from purely novelty question-asking into the back-and-forth of a meaningful relationship.

I can’t relate to the idea of having a meaningful relationship with a chatbot (though more power to those that can!), but this does relate very directly to our thesis: it’s an example of friction being a feature. The blog post describes the use-case of a “life coach.” The most frictionless way to get a lot of “life coach” type advice is just to Google information about diet, exercise, etc., but the value of a coach is often that they package this information up in the form of an ongoing conversation that resonates with the coachee.

The friction isn’t just a feature, it’s the core feature, and brings us full circle to the quote we started out with.

Conclusions

The examples point to a pretty clear conclusion: in each case where AI is really working for users, it’s because the “clunky” interaction model is either fusing a bunch of steps together to actually reduce friction (research, coding, etc.), is a key feature (relationships with bots), or has been to some degree sidestepped (copilots). Clearly this analysis was far from exhaustive, but it explains the observations reasonably well.

To come back to the motivation for writing this, I think the funniest example of "AI for X" was the Rabbit R1 unveiling, when the presenter ordered an Uber, which left me firmly convinced that my smartphone is very good for ordering an Uber.

For companies and teams there is currently huge pressure to adopt AI, which brings with it the associated career incentives to plough ahead. But I suspect successful products and projects will be the ones that really wrestle with how well the interaction model fits the problem at hand.